A Solution for AI’s Bias Problem

As artificial intelligence gains momentum, University of California researchers are identifying discrimination in the algorithms that shape our society, devising solutions, and helping build a future where computers do us less harm and more good.

Zubair Shafiq, a professor of computer science in the UC Davis College of Engineering, studies how AI can magnify biases and show "conspiratorial or problematic" content to social media users. Shafiq notes that developers who train AI systems to prioritize engagement, such as clicks on articles or comments on videos, can contribute to this problem.

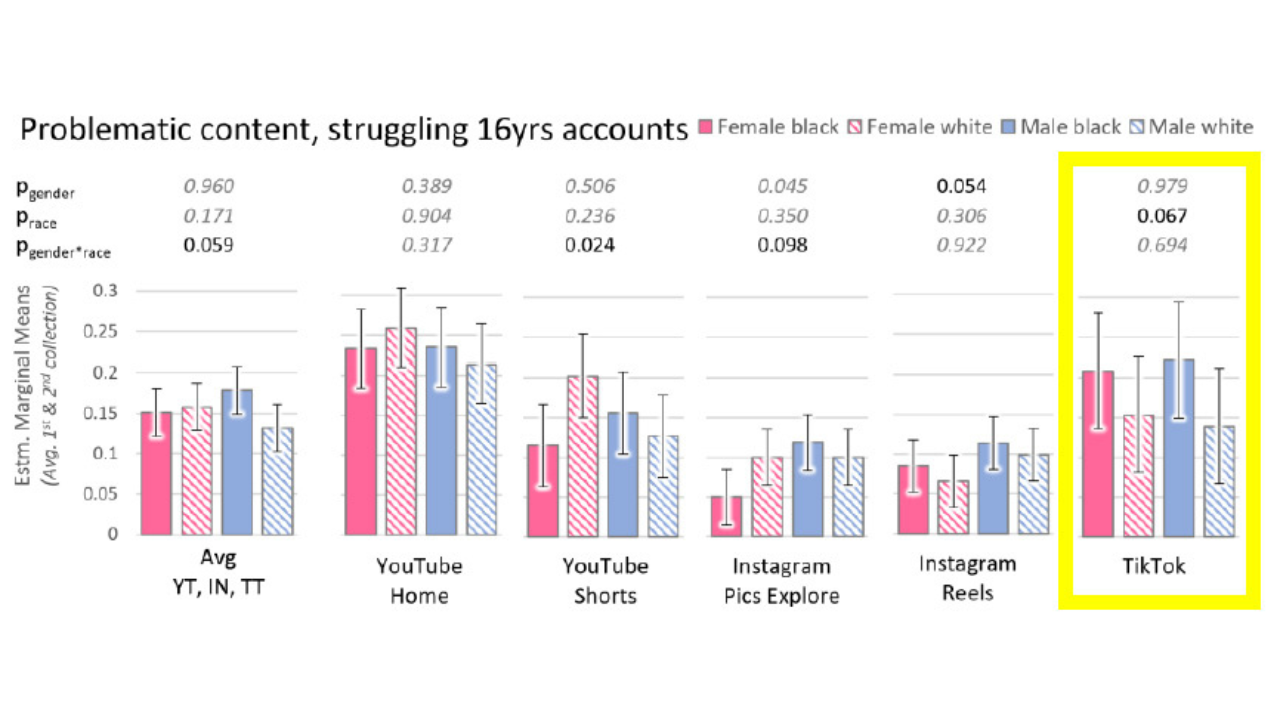

In a 2023 study, his team found that YouTube is more likely to suggest problematic videos to users at the extremes of America's political spectrum, and even more so the longer a user watches. In another study, they found that TikTok perpetuated biases based on a user's profile photo, showing users different content based on their perceived racial identity.

The solution? Corporate accountability and government regulation.

Shafiq is eager to see tech companies like YouTube and Meta take research like his into account. With this research in hand, he believes they can tweak their algorithms to avert the bias, but he won't wait around to see whether they make changes of their own accord. Lately, he has been working to get his findings in front of public officials and policymakers, such as the dozens of state attorneys general and hundreds of school districts nationwide that have sued social media companies over the alleged harms of their products to kids' mental health.